A SCOPE REVIEW OF STUDIES ON FACIAL EXPRESSIONS OF EMOTION AND SIGN LANGUAGE

Rafael Silva Guilherme

UFJF – Universidade Federal de Juiz de Fora

Rosana Suemi Tokumaru

UFES – Universidade Federal do Espírito Santo

Received: 01/08/2023

Approved: 28/09/2023

Published: 29/12/2023

Abstract

Facial expressions of emotion are universal and understood by all human beings. For sign language users, however, facial expressions also serve as linguistic markers. The objective of this study was to conduct a scoping review of the scientific literature on the relationship between the use of sign language and the perception and recognition of facial expressions of emotion. Data were collected from APA PsycNET, Scientific Electronic Library Online (SciELO), and Scopus. A total of 376 publications were identified, and 15 articles were selected according to the inclusion and exclusion criteria and the PRISMA-ScR guidelines. Most of the studies compared deaf signers with hearing non-signers. The main stimuli used were static images (photographs of facial expressions of emotion). The most common finding was the absence of significant differences between participants with sign language knowledge and hearing non-signers.

Keywords: Nonverbal communication. Facial expression of emotion. Sign Language.

INTRODUCTION

Sign languages belong to the visual-gestural modality, unlike oral-auditory languages, in which communication occurs through words combined into sentences and perceived by hearing. In sign languages (SL), communication takes place through body and facial gestures, and the message is received visually. Sign languages are not universal: there is a diversity of sign languages worldwide, and several sign languages often coexist in the same territory (Góes; Campos, 2018).

In Brazil, Brazilian Sign Language (Libras) was legally recognized as a language in 2002 through Law 10.436/02 (BRAZIL, 2002). Historically, the first school for the deaf in the country was founded in 1857; the institution is known today as the National Institute of Deaf Education (INES) (Góes; Campos, 2018).

Currently, research in various fields of knowledge is investigating Libras, deaf people, and Libras–Portuguese translators and interpreters (TILSP), among other topics, in an attempt to understand how this language is learned and translated (Góes; Campos, 2018).

Facial expressions of emotion are considered an essential part of nonverbal communication, as they are visible manifestations of our emotional reactions produced by the activation of the facial muscles. How emotions are expressed on the face depends not only on the emotion itself but on many other factors, especially innate biological factors shared by all humans and the environmental demands of a specific moment (Matsumoto; Hwang, 2016).

The facial expressions of six emotions (joy, fear, sadness, anger, disgust, and surprise) are considered basic because they are universally recognized; they can occur spontaneously or be produced deliberately. Emotions serve an adaptive function, playing a fundamental role in our survival and social interactions (Miguel, 2015).

In SL, facial expressions also have a linguistic function, as Non-Manual Markers (NMMs) (Liddell, 2003). These markers are a form of visual prosody expressed through the face or body. They play crucial roles in communication, marking negative, affirmative, and interrogative constructions, and they include gaze direction, eyebrow raising, and mouth movements, among others (Santos, 2022). NMMs operate at the morphological and syntactic levels of sign languages and are essential for the complete and accurate understanding of the message (Liddell, 2003; Santos, 2022).

In their first year of life, deaf and hearing children use basic facial expressions to express themselves and to pick up emotional cues. As they acquire language, deaf children learning a sign language face a dual task: they must learn to use their face both emotionally and linguistically (Corina; Bellugi; Reilly, 1999). Corina, Bellugi and Reilly (1999) studied emotional and linguistic facial expressions in healthy deaf participants, deaf participants with right- and/or left-hemisphere brain damage, and hearing individuals. The results indicated that affective expressions were mediated by the right hemisphere and linguistic expressions by the left hemisphere.

Lewis, Sullivan, and Vasen (1987), in turn, studied the basic facial expressions of infants, young children, and hearing adults. They found that children up to two years old were less expressive; three-year-olds were more expressive for happiness and surprise; four- and five-year-olds were better at producing emotions in the lower part of the face; and adults were better at all emotions except fear and disgust. Together, these studies indicate that, although facial expressions of emotion occur from birth, the ability to recognize and produce them changes with development and social experience (Keating, 2016).

The works of Ekman and Friesen (1978) and Elfenbein and Ambady (2003) also showed the influence of culture on the display and recognition of facial expressions of emotion. Ekman and Friesen (1978) observed that Japanese and North American participants expressed emotions more similarly when they were alone than when they were in the presence of others. They coined the term “display rules” for culturally learned norms about how to manage emotional displays in social situations. Elfenbein and Ambady (2003) coined the term “nonverbal accent” for the particular characteristics of emotional expressions that differentiate cultural groups.

Because facial expressions are used linguistically in sign languages, and there is evidence that social experience modifies the production and recognition of facial expressions of emotion, several studies have investigated the relationship between sign language learning and the production and recognition of facial expressions of emotion (Corina; Bellugi; Reilly, 1999; De Vos; Van Der Kooij; Crasborn, 2009; Figueiredo; Lourenço, 2019; Grossman; Kegl, 2007; Xavier, 2019). Some studies have shown that negative facial expressions (disgust, sadness, and anger) were better recognized by deaf participants, while positive expressions (happiness) were better recognized by hearing individuals (Jones; Gutierrez; Ludlow, 2018, 2021). In contrast, Dobel et al. (2020) concluded that hearing individuals recognized anger expressions better, whereas deaf individuals recognized happiness expressions better.

Studies on the recognition of facial expressions of emotion (EFE) in participants with knowledge of SL also vary in the emotions investigated. Some used all six basic emotions and a neutral expression (Goldstein; Sexton; Feldman, 2000; Jones; Gutierrez; Ludlow, 2018; Weisel, 1985), while others selected specific emotions (Dobel et al., 2020; Krejtz et al., 2019). The studies also differ in how expressions are presented. Alves (2013) reviewed studies that compared the recognition of EFE presented statically or dynamically and concluded that dynamic facial expressions are more suitable for research on emotion recognition. Among studies on emotion recognition in sign language users, some used static stimuli (Krejtz et al., 2019; Stoll et al., 2019), others dynamic stimuli (Grossman; Kegl, 2007; Jones; Gutierrez; Ludlow, 2021), and others mixed stimuli (Claudino et al., 2020; Jones; Gutierrez; Ludlow, 2018).

Given the diversity of studies on facial expressions of emotion in sign language users, the heterogeneity of their results may be related to differences in research designs or participant profiles (Stoll et al., 2019). Accordingly, this work aims to evaluate the scientific production on the relationship between the use of sign language and the perception, recognition, and production of facial expressions of emotion. We analyze the following characteristics of this production: the field of knowledge and location of the research, the population investigated, the methods employed, and the results obtained. The goal is to assess the knowledge generated on the topic, identify gaps, and discuss possible avenues for future research.

Method

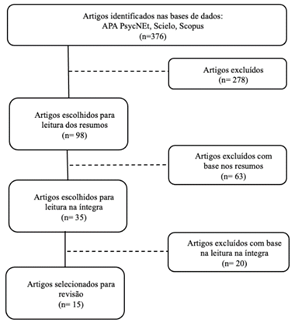

This is a scoping review, which involves searching for and carefully evaluating published studies on facial expressions of emotion in participants with knowledge of sign language. To conduct it, we followed the guidelines of Tricco et al. (2018).

The guiding questions of this work were: What has been published about the relationship between EFE and SL? Which emotions are most often investigated in these studies? What methods were used? What was the profile of the participants? What were the main results?

To describe the article selection process, we used the PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation (Tricco et al., 2018) as a reference. The databases searched were APA PsycNET, Scientific Electronic Library Online (SciELO), and Scopus. The descriptors, used in English and Portuguese, were: “non-verbal communication” OR “non-verbal language” AND “verbal language” AND “sign language” AND “facial expression” AND “emotion” AND “interpreter translator” AND “deaf” AND “recognition”.
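The descriptor list above mixes OR and AND without parentheses, and search interfaces differ in how they resolve such mixed expressions, so the exact query is ambiguous as written. The following Python sketch builds an explicitly parenthesized query string. It assumes, as a hypothetical reading, that OR groups only the first two descriptors and that every other descriptor is combined with AND; the function names are illustrative and not part of any database's interface.

```python
from typing import List


def quote(term: str) -> str:
    """Wrap a descriptor in double quotes so it is searched as an exact phrase."""
    return f'"{term}"'


def any_of(terms: List[str]) -> str:
    """Join alternative descriptors with OR, inside parentheses."""
    return "(" + " OR ".join(quote(t) for t in terms) + ")"


def build_query(groups: List[List[str]]) -> str:
    """Combine groups with AND; a group with several synonyms becomes an OR block."""
    return " AND ".join(any_of(g) if len(g) > 1 else quote(g[0]) for g in groups)


# Assumed grouping (hypothetical): only the first two descriptors are alternatives.
DESCRIPTOR_GROUPS = [
    ["non-verbal communication", "non-verbal language"],
    ["verbal language"],
    ["sign language"],
    ["facial expression"],
    ["emotion"],
    ["interpreter translator"],
    ["deaf"],
    ["recognition"],
]

if __name__ == "__main__":
    print(build_query(DESCRIPTOR_GROUPS))
```

Writing the grouping explicitly makes the query reproducible across databases, whatever precedence rules each one applies by default.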

The inclusion criteria were: scientific articles, national and international, published in English, Spanish, or Portuguese in specialized journals indexed in the databases above, with the full text available. No restriction was placed on publication year. Literature reviews, theses, dissertations, and publications that did not focus on the relationship between facial expressions of emotion and sign language were excluded, as were duplicate articles and abstracts without full text.
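The inclusion and exclusion criteria can be read as an ordered screening rule. The sketch below applies them to a list of records, assuming a hypothetical `Record` structure whose fields (document type, language, full-text availability, and the reviewers' on-topic judgment) are illustrative. The topic judgment remains a manual decision by the reviewers and is represented here only as a boolean; detecting duplicates by normalized title is also an assumption.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


# Hypothetical record structure; the field names are illustrative only.
@dataclass(frozen=True)
class Record:
    title: str
    doc_type: str    # e.g. "article", "review", "thesis", "dissertation"
    language: str    # e.g. "English", "Spanish", "Portuguese"
    full_text: bool  # True if the full text is available for reading
    on_topic: bool   # reviewers' judgment: focuses on EFE and sign language


ACCEPTED_LANGUAGES = {"English", "Spanish", "Portuguese"}


def exclusion_reason(record: Record) -> Optional[str]:
    """Return the first criterion the record fails, or None if it is eligible."""
    if record.doc_type == "review":
        return "literature review"
    if record.doc_type != "article":
        return "not a journal article"
    if record.language not in ACCEPTED_LANGUAGES:
        return "language not accepted"
    if not record.full_text:
        return "full text unavailable"
    if not record.on_topic:
        return "does not address EFE and sign language"
    return None


def screen(records: List[Record]) -> Tuple[List[Record], List[Tuple[Record, str]]]:
    """Split records into included ones and excluded ones paired with a reason.

    Duplicates are detected by a normalized title (lowercase, collapsed
    whitespace); the first occurrence is kept and later ones are excluded.
    """
    seen = set()
    included: List[Record] = []
    excluded: List[Tuple[Record, str]] = []
    for record in records:
        key = " ".join(record.title.lower().split())
        if key in seen:
            excluded.append((record, "duplicate"))
            continue
        seen.add(key)
        reason = exclusion_reason(record)
        if reason is None:
            included.append(record)
        else:
            excluded.append((record, reason))
    return included, excluded
```

Recording a reason for each exclusion is what allows the counts in a PRISMA-ScR flowchart to be filled in afterwards.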

After the articles were selected and read, summaries were prepared. Microsoft Office Excel was used to record the information of interest: author(s), publication year, article title, journal, method, population studied, instruments used, emotions investigated, and main results.
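The extraction fields listed above map naturally onto one row per article. As a minimal sketch, assuming a hypothetical `ExtractionRow` structure and CSV export as a stand-in for the Excel sheet (a CSV file can be opened in Excel), the record could look like this:

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import List


# Hypothetical extraction record mirroring the fields listed in the Method.
@dataclass
class ExtractionRow:
    authors: str
    year: int
    title: str
    journal: str
    method: str
    population: str
    instruments: str
    emotions: str
    main_results: str


def to_csv(rows: List[ExtractionRow]) -> str:
    """Serialize extraction rows to CSV text, with a header row of field names."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(ExtractionRow)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))
    return buffer.getvalue()


if __name__ == "__main__":
    # Placeholder values only; not data from any reviewed study.
    example = ExtractionRow("Author, A.", 2000, "Title", "Journal", "Method",
                            "Population", "Instruments", "Emotions", "Results")
    print(to_csv([example]))
```

Fields that contain commas, such as author lists, are quoted automatically by the `csv` module, so the file still opens with one column per field.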

Results

Of the 376 publications identified, 15 articles were selected following the inclusion and exclusion criteria (Figure 1) and the PRISMA-ScR guidelines.

Figure 1 – Flowchart of article selection

All the articles were published in English. Publications on EFE and SL came from only five countries (Table 1), most of them from Great Britain; only one publication was from Brazil.

Table 1 – Geographic distribution of articles

| Country of publication | n (N = 15) | % |
|---|---|---|
| Great Britain | 8 | 53.3 |
| United States | 3 | 20.0 |
| Israel | 2 | 13.3 |
| Germany | 1 | 6.7 |
| Brazil | 1 | 6.7 |
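The percentages in Table 1, as in the other tables, are each category's count divided by the 15 selected articles. The sketch below reproduces them to one decimal place; the `percentages` helper is illustrative. Because each value is rounded independently, the rounded percentages in a table need not add up to exactly 100.

```python
from typing import Dict


def percentages(counts: Dict[str, int], decimals: int = 1) -> Dict[str, float]:
    """Convert category counts to percentages of the total, each rounded independently."""
    total = sum(counts.values())
    return {category: round(100 * n / total, decimals) for category, n in counts.items()}


# Counts transcribed from Table 1.
TABLE_1 = {"Great Britain": 8, "United States": 3, "Israel": 2, "Germany": 1, "Brazil": 1}

if __name__ == "__main__":
    for country, pct in percentages(TABLE_1).items():
        print(f"{country}: {pct}")
```

The same function reproduces the percentage columns of Tables 2 to 5 when given their counts.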

The oldest publication dates from 1985 and the most recent from 2021 (Table 2). The year with the most publications was 2019, although no single year stands out markedly. Grouped by decade, the number of publications generally increases: one in the 1980s, three in the 1990s, two in the 2000s, six in the 2010s, and three from 2020 onward. Studies on facial expressions of emotion in sign languages have therefore intensified in the 21st century, particularly since the 2010s.

Table 2 – Temporal distribution of articles

| Year of publication | Articles (n) | % |
|---|---|---|
| 1985 | 1 | 6.7 |
| 1996 | 1 | 6.7 |
| 1997 | 2 | 13.3 |
| 2000 | 1 | 6.7 |
| 2007 | 1 | 6.7 |
| 2010 | 1 | 6.7 |
| 2017 | 2 | 13.3 |
| 2019 | 3 | 20.0 |
| 2020 | 2 | 13.3 |
| 2021 | 1 | 6.7 |
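The decade counts reported in the paragraph before Table 2 can be checked by grouping the yearly counts in Table 2 by decade. A minimal Python sketch, with the table transcribed by hand:

```python
from collections import Counter
from typing import Dict

# Articles per publication year, transcribed from Table 2.
TABLE_2 = {1985: 1, 1996: 1, 1997: 2, 2000: 1, 2007: 1,
           2010: 1, 2017: 2, 2019: 3, 2020: 2, 2021: 1}


def by_decade(counts_by_year: Dict[int, int]) -> Dict[int, int]:
    """Sum yearly counts into decades labelled by their first year (e.g. 1990)."""
    decades: Counter = Counter()
    for year, n in counts_by_year.items():
        decades[year // 10 * 10] += n
    return dict(sorted(decades.items()))


if __name__ == "__main__":
    print(by_decade(TABLE_2))
    since_2000 = sum(n for year, n in TABLE_2.items() if year >= 2000)
    print(f"{since_2000} of {sum(TABLE_2.values())} articles published from 2000 onward")
```

Run as a script, it prints `{1980: 1, 1990: 3, 2000: 2, 2010: 6, 2020: 3}` and `11 of 15 articles published from 2000 onward`, matching the decade breakdown in the text.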

The majority of studies (40%) were published in journals related to psychology (general psychology; neuropsychology; cognitive and clinical psychology; social psychology). The remaining studies were published in journals with topics related to deafness, non-verbal communication, and visual perception and cognition (Table 3).

Table 3 – Subject areas of the journals

| Journal subject area | n (N = 15) | % |
|---|---|---|
| Psychology (various areas) | 6 | 40.0 |
| Deafness (various topics) | 5 | 33.3 |
| Nonverbal communication | 2 | 13.3 |
| Visual perception and cognition | 2 | 13.3 |

Most of the reviewed studies investigated the recognition of facial expressions of emotion (Claudino et al., 2020; Dobel et al., 2020; Goldstein; Feldman, 1996; Grossman; Kegl, 2007; Jones; Gutierrez; Ludlow, 2018; Krejtz et al., 2019; Ludlow et al., 2010; Sidera; Amadó; Martínez, 2017; Stoll et al., 2019; Weisel, 1985). Only two studies investigated the production of EFE (Goldstein; Sexton; Feldman, 2000; Jones; Gutierrez; Ludlow, 2021). The remaining studies had other objectives in addition to recognition, such as comparing the ability to recognize, memorize, and process EFE (McCullough; Emmorey, 1997), comparing the ability to discriminate images of human faces (Bettger et al., 1997), and comparing the processing of central and peripheral facial information in expressions of joy and fear and in the neutral face (Shalev et al., 2020). In all studies, groups of individuals who communicate in sign language, deaf or hearing, were compared with groups of hearing individuals who do not.

Regarding the study populations, most studies (73%) investigated adults, while only 27% investigated children. Thirteen studies (87%) examined both deaf and hearing individuals, while only two (13%) examined exclusively hearing participants. Overall, the total number of hearing participants (N = 648) was greater than that of deaf participants (N = 523).

Regarding the presentation format of facial expressions of emotion (EFE), static images (photographs) were the most commonly used stimulus (Table 4).

Table 4 – Presentation format of EFE

| Type of stimulus | n (N = 15) | % |
|---|---|---|
| Photography | 8 | 53.3 |
| Photography and video | 2 | 13.3 |
| Video | 5 | 33.3 |

Regarding the emotions used in the experiments, seven studies (47%) used the six basic emotions (happiness, sadness, anger, disgust, fear, and surprise) (Goldstein; Feldman, 1996; Goldstein; Sexton; Feldman, 2000; Jones; Gutierrez; Ludlow, 2018, 2021; Sidera; Amadó; Martínez, 2017; Stoll et al., 2019; Weisel, 1985). Five studies (33%) used only some of the basic emotions (Claudino et al., 2020; Dobel et al., 2020; Krejtz et al., 2019; Ludlow et al., 2010; Shalev et al., 2020). One study (7%) used some basic emotions together with grammatical facial expressions of a sign language (Grossman; Kegl, 2006). Finally, two studies (13%) did not report which EFE were used (Bettger et al., 1997; McCullough; Emmorey, 1997).

The reviewed studies investigated eight different sign languages. American Sign Language (ASL) was the most frequently studied among deaf and hearing signers (Table 5).

Table 5 – Sign Language Used in the Studies

| Sign language | n (N = 15) | % |
|---|---|---|
| American (ASL) | 5 | 33.3 |
| British | 3 | 20.0 |
| Israeli | 2 | 13.3 |
| Brazilian (Libras) | 1 | 6.7 |
| Polish | 1 | 6.7 |
| German | 1 | 6.7 |
| Spanish | 1 | 6.7 |
| Swiss | 1 | 6.7 |

Regarding data collection procedures, most studies (60%) presented EFE on a computer screen. In two studies (13%), EFE were presented as printed images. In three studies (20%), participants' emotional expressions were recorded and later analyzed by judges and software. Finally, only one study (7%) presented EFE on a computer screen while also recording participants' emotional reactions as they viewed the expressions. These different procedures allowed emotions, and participants' responses to the stimuli, to be examined from different angles.

The main findings of the reviewed studies vary with respect to the recognition of facial expressions of emotion (EFE). Five studies found no differences between participants with knowledge of sign language and hearing non-signers (Krejtz et al., 2019; McCullough; Emmorey, 1997; Shalev et al., 2020; Stoll et al., 2019; Weisel, 1985). In contrast, three studies found that individuals who use sign language, whether deaf or hearing, performed better in emotion recognition than people without knowledge of sign language (Bettger et al., 1997; Claudino et al., 2020; Goldstein; Feldman, 1996). Finally, two studies found that people without knowledge of sign language, and hence without experience with it, outperformed signers in emotion recognition tasks (Grossman; Kegl, 2006; Ludlow et al., 2010).
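The classification of recognition outcomes above amounts to a vote count. The sketch below tallies the ten recognition studies by reported outcome, with the studies transcribed from the paragraph above; the outcome labels are shorthand introduced here for illustration. Vote counting is only a coarse descriptive summary: it ignores sample sizes and effect sizes, so it cannot show whether an advantage is large or reliable. It does make explicit that "no difference" accounts for five of the ten classified studies, that is, half rather than a strict majority.

```python
from collections import Counter
from typing import Dict, Tuple

# Outcome per recognition study, transcribed from the Results text.
# The labels are illustrative shorthand, not the authors' terminology.
RECOGNITION_OUTCOMES = {
    "Krejtz et al., 2019": "no difference",
    "McCullough; Emmorey, 1997": "no difference",
    "Shalev et al., 2020": "no difference",
    "Stoll et al., 2019": "no difference",
    "Weisel, 1985": "no difference",
    "Bettger et al., 1997": "signers better",
    "Claudino et al., 2020": "signers better",
    "Goldstein; Feldman, 1996": "signers better",
    "Grossman; Kegl, 2006": "non-signers better",
    "Ludlow et al., 2010": "non-signers better",
}


def vote_count(outcomes: Dict[str, str]) -> Dict[str, Tuple[int, float]]:
    """Count studies per outcome label and give each count's share of the total."""
    tally = Counter(outcomes.values())
    total = sum(tally.values())
    return {label: (n, n / total) for label, n in tally.most_common()}


if __name__ == "__main__":
    for label, (n, share) in vote_count(RECOGNITION_OUTCOMES).items():
        print(f"{label}: {n} ({share:.0%})")
```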

In the two reviewed studies that investigated the production of facial expressions of emotion, participants who used sign language performed better at producing them than people who did not know sign language (Goldstein; Sexton; Feldman, 2000; Jones; Gutierrez; Ludlow, 2021).

Regarding specific emotions, five of the reviewed studies found that joy was recognized or produced more accurately than other emotions (Goldstein; Feldman, 1996; Krejtz et al., 2019; Shalev et al., 2020; Sidera; Amadó; Martínez, 2017; Stoll et al., 2019). Two studies reported that disgust was better recognized by sign language users than by people who did not know sign language (Goldstein; Sexton; Feldman, 2000; Weisel, 1985).

Regarding the stimuli and the intensity of EFE as perceived by participants, one study found that with dynamic stimuli, deaf signers had more difficulty than both hearing signers and hearing non-signers (Claudino et al., 2020). The intensity of EFE was assessed in one recognition study (Stoll et al., 2019) and one production study (Jones; Gutierrez; Ludlow, 2021). In both, participants who used sign language showed higher intensity in identifying and displaying EFE.

As for gaze direction, one study found that deaf signers focused more on the mouth when recognizing EFE (Dobel et al., 2020). However, another study reported that deaf signers directed their gaze toward the mouth less than hearing non-signers did (Krejtz et al., 2019).

DISCUSSION

In this study, we conducted a scoping review of publications on facial expressions of emotion and sign languages. We identified studies with deaf participants who use sign language and with hearing individuals who know sign language. The earliest study we found dates from the 1980s, and most of the identified publications are recent, concentrated in the last decade. We believe this growth may reflect the recognition and visibility that studies of sign languages, deaf populations, and sign language interpreters have gained in recent years.

Facial expressions of emotion (EFE) are universal (Ekman, 1999), but sign languages (SL) are not (Góes; Campos, 2018): they are visual-gestural languages specific to particular countries and communities. Most of the reviewed publications studied EFE in deaf participants who use a sign language; only two studies were conducted with hearing participants who use a sign language. Across the studies, we found eight different sign languages studied in six different countries.

We found only one Brazilian study on Libras (Brazilian Sign Language). We assume that the scarcity of Brazilian studies on EFE and Libras reflects the fact that the language was only recently recognized in the country, through Law 10.436/02 and Decree 5.626/05, which address, among other things, the training of professionals to teach, translate, and interpret Libras (Brazil, 2002, 2005). Since then, research efforts have increased in various fields (Azevedo; Giroto; Santana, 2015), especially linguistics and deaf education (Lins; Nascimento, 2015; Pereira; Senhem, 2021). Furthermore, we assume that the absence of discussions about EFE as nonverbal communication in Libras may be related to the interest of Brazilian studies in the grammatical facial expressions (non-manual markers) of the language (Figueiredo; Lourenço, 2019; Pfau; Josep, 2010).

Notably, we found only two studies with hearing populations who know sign language (Goldstein; Feldman, 1996; Goldstein; Sexton; Feldman, 2000). We conjecture that there are few studies on EFE in hearing signers because hearing people usually learn sign language as a second language, whereas deaf people can acquire it as a first language (Quadros, 2004; Quadros; Schmiedt, 2006).

Regarding the stimuli used to present emotions, most publications used static images, mainly photographs of human faces, with or without software manipulation, followed by dynamic stimuli, primarily videos. Alves (2013) reviewed publications that used static and dynamic expressions and criticized the use of static stimuli, reinforcing the hypothesis that dynamic expressions are more ecologically valid. In our review, however, besides finding more studies with static stimuli, we observed that this methodology was aligned with the researchers' objectives and contributed to knowledge about group differences in the recognition and production of EFE. These results suggest that different methodologies can reveal different aspects of the recognition and production of EFE.

These different aspects of EFE recognition become evident in the results of Jones et al. (2018): deaf individuals recognized dynamic images better than static ones at both high and low intensity levels. Compared with the two control groups (deaf and hearing individuals without SL knowledge), deaf children performed worse at recognizing emotions in static images. Bould and Morris (2008) also found that participants achieved better results in the dynamic condition. Both groups of authors argue that deaf individuals rely on visual and contextual emotion cues, as well as on the linguistic markers of SL, so dynamic facial expressions would be easier for deaf people to recognize. The criticism is that tasks with static images reflect everyday stimuli less accurately, because the movement of signs that accompanies facial expressions can provide information for the recognition process (Bould; Morris, 2008).

The diverging findings of the reviewed studies show that the recognition and production of EFE can be influenced by several factors, including knowledge of and experience with sign language. This variation highlights the complexity of the interaction between language and EFE recognition and underscores the importance of further research to understand how these factors affect the perception and expression of emotions.

Although this study reviews a range of research findings on the recognition of facial expressions in people with knowledge of sign language (deaf and hearing individuals), it has some limitations: a) although we searched three databases, relevant articles not indexed in them may have been missed; b) the heterogeneity of participants across studies (deaf individuals, sign language users, children, and adults) may limit the generalizability of our analysis; c) the facial expressions investigated varied among studies, which also makes generalization difficult.

FINAL CONSIDERATIONS

In this study, we reviewed research on the recognition and display of facial expressions of emotion in people with sign language knowledge, both deaf and hearing. We found relatively few studies on this topic, which suggests that research in this area is still limited.

The reviewed studies reported a variety of findings: some found an advantage for participants with sign language knowledge in recognizing or producing facial expressions of emotion, others found the opposite, and the most frequent result was no difference between participants with and without sign language knowledge. This contrasts with the initial expectation that participants with sign language knowledge might have an advantage in these tasks.

Several factors may be influencing this diversity of results. One of them is the variety of stimuli used in the studies, which might impact how facial expressions are perceived and interpreted by the participants. Additionally, the profile of the participants, including their experience with sign language and their ability to read facial expressions, may be an important factor.

This complexity in the results highlights the need to continue researching and investigating the relationship between knowledge in sign language and the recognition of facial expressions of emotion. New studies with more detailed and specific approaches can help clarify this issue and contribute to the advancement of knowledge about emotions in sign language communication.

REFERENCES

ALVES, N. T. Recognition of static and dynamic facial expressions: a study review. Estudos de Psicologia (Natal), v. 18, n. 1, p. 125–130, Mar. 2013.

AZEVEDO, C. B. DE; GIROTO, C. R. M.; SANTANA, A. P. DE O. Produção científica na área da surdez: Análise dos artigos publicados na Revista Brasileira de Educação Especial no período de 1992 a 2013. Revista Brasileira de Educação Especial, v. 21, p. 459–476, 2015.

BETTGER, J. G. et al. Enhanced facial discrimination: effects of experience with American Sign Language. Journal of Deaf Studies and Deaf Education, v. 2, n. 4, p. 223–233, 1 Jan. 1997.

BOULD, E.; MORRIS, N. Role of motion signals in recognizing subtle facial expressions of emotion. British Journal of Psychology, v. 99, n. 2, p. 167–189, May 2008.

BRASIL. Lei no 10.436, de 24 de abril de 2002. Dispõe sobre a Língua Brasileira de Sinais-Libras e dá outras providências. [s.l.] Diário Oficial da União, 2002.

BRASIL. Decreto no 5.626, de 22 de dezembro de 2005. Regulamenta a Lei no 10.436, de 24 de abril de 2002, que dispõe sobre a Língua Brasileira de Sinais-Libras. [s.l.] Diário Oficial da União, 2005.

CLAUDINO, R. G. E. et al. Use of sign language does not favor recognition of static and dynamic emotional faces in deaf people. Psychology & Neuroscience, v. 13, n. 4, p. 531–538, Dec. 2020.

CORINA, D. P.; BELLUGI, U.; REILLY, J. Neuropsychological studies of linguistic and affective facial expressions in deaf signers. Language and Speech, v. 42, n. 2–3, p. 307–331, 1999.

DE VOS, C.; VAN DER KOOIJ, E.; CRASBORN, O. Mixed Signals: Combining Linguistic and Affective Functions of Eyebrows in Questions in Sign Language of the Netherlands. Language and Speech, v. 52, n. 2–3, p. 315–339, 3 Jun. 2009.

DOBEL, C. et al. Deaf signers outperform hearing non-signers in recognizing happy facial expressions. Psychological Research, v. 84, n. 6, p. 1485–1494, 1 Sept. 2020.

EKMAN, P. Facial expression. Handbook of cognition and emotion, v. 16, p. 301–320, 1999.

EKMAN, P.; FRIESEN, W. V. Facial action coding system. [s.l.] Environmental Psychology & Nonverbal Behavior, 1978.

ELFENBEIN, H. A.; AMBADY, N. Universals and Cultural Differences in Recognizing Emotions. Current Directions in Psychological Science, v. 12, n. 5, p. 159–164, 24 Oct. 2003.

FIGUEIREDO, L. M. B.; LOURENÇO, G. O movimento de sobrancelhas como marcador de domínios sintáticos na Língua Brasileira de Sinais. Revista da Anpoll, v. 1, n. 48, p. 78–102, 25 Jun. 2019.

GÓES, A. M.; CAMPOS, M. DE L. I. L. Aspecto da Gramática da Libras. In: LACERDA, C. B. F.; SANTOS, L. F. (Eds.). Tenho um aluno surdo e agora? Introdução a Libras e educação dos surdos. [s.l.]: EdUFSCar, 2008. p. 65–80.

GOLDSTEIN, N. E.; FELDMAN, R. S. Knowledge of American Sign Language and the ability of hearing individuals to decode facial expressions of emotion. Journal of Nonverbal Behavior, v. 20, n. 2, p. 111–122, 1996.

GOLDSTEIN, N. E.; SEXTON, J.; FELDMAN, R. S. Encoding of facial expressions of emotion and knowledge of American Sign Language. Journal of Applied Social Psychology, v. 30, n. 1, p. 67–76, 2000.

GROSSMAN, R. B.; KEGL, J. To capture a face: A novel technique for the analysis and quantification of facial expressions in American Sign Language. Sign Language Studies, v. 6, n. 3, Mar. 2006.

GROSSMAN, R. B.; KEGL, J. Moving faces: Categorization of dynamic facial expressions in American Sign Language by deaf and hearing participants. Journal of Nonverbal Behavior, v. 31, n. 1, p. 23–38, Mar. 2007.

JONES, A. C.; GUTIERREZ, R.; LUDLOW, A. K. The role of motion and intensity in deaf children’s recognition of real human facial expressions of emotion. Cognition & Emotion, v. 32, n. 1, p. 102–115, 2 Jan. 2018.

JONES, A. C.; GUTIERREZ, R.; LUDLOW, A. K. Emotion production of facial expressions: A comparison of deaf and hearing children. Journal of Communication Disorders, v. 92, 1 Jul. 2021.

KEATING, C. F. The developmental arc of nonverbal communication: Capacity and consequence for human social bonds. In: APA handbook of nonverbal communication. Washington: American Psychological Association, 2016. p. 103–138.

KREJTZ, I. et al. Attention Dynamics During Emotion Recognition by Deaf and Hearing Individuals. The Journal of Deaf Studies and Deaf Education, 29 Oct. 2019.

LEWIS, M.; SULLIVAN, M. W.; VASEN, A. Making faces: Age and emotion differences in the posing of emotional expressions. Developmental Psychology, v. 23, n. 5, p. 690–697, Sept. 1987.

LIDDELL, S. K. Grammar, Gesture, and Meaning in American Sign Language. [s.l.] Cambridge University Press, 2003.

LINS, H. A. DE M.; NASCIMENTO, L. C. R. Algumas tendências e perspectivas em artigos publicados de 2009 a 2014 sobre surdez e educação de surdos. Pro-Posições, v. 26, n. 3, p. 27–40, Dec. 2015.

LUDLOW, A. et al. Emotion recognition in children with profound and severe deafness: Do they have a deficit in perceptual processing? Journal of Clinical and Experimental Neuropsychology, v. 32, n. 9, p. 923–928, Nov. 2010. DOI: http://dx.doi.org/10.1080/13803391003596447.

MATSUMOTO, D.; HWANG, H. C. The cultural bases of nonverbal communication. In: APA handbook of nonverbal communication. Washington: American Psychological Association, 2016. p. 77–101.

MCCULLOUGH, S.; EMMOREY, K. Face Processing by Deaf ASL Signers: Evidence for Expertise in Distinguishing Local Features. Journal of Deaf Studies and Deaf Education, v. 2, n. 4, p. 212–222, 1 Jan. 1997.

MIGUEL, F. K. Psicologia das emoções: uma proposta integrativa para compreender a expressão emocional. Psico-USF, v. 20, n. 1, p. 153–162, Apr. 2015.

PEREIRA, I.; SENHEM, P. R. Atualizações sobre internacionalização da educação de surdos. Revista e-Curriculum, v. 19, n. 2, p. 729–747, 30 Jun. 2021.

PFAU, R.; JOSEP, Q. Nonmanuals: their grammatical and prosodic roles. [s.l.]: Cambridge University Press, 2010. p. 381–402.

QUADROS, R. M. Língua de sinais brasileira: estudos linguísticos. [s.l.]: Artmed, 2004.

QUADROS, R. M.; SCHMIEDT, M. L. Ideias para ensinar português para alunos surdos. Brasília: MEC/SEESP, 2006.

SANTOS, H. R. Traços não manuais de eventualidades em Libras. Estudos Linguísticos e Literários, n. 72, p. 126–148, 10 May 2022.

SHALEV, T. et al. Do deaf individuals have better visual skills in the periphery? Evidence from processing facial attributes. Visual Cognition, v. 28, n. 3, p. 205–217, 15 Mar. 2020.

SIDERA, F.; AMADÓ, A.; MARTÍNEZ, L. Influences on Facial Emotion Recognition in Deaf Children. Journal of Deaf Studies and Deaf Education, v. 22, n. 2, p. 164–177, 1 Apr. 2017.

STOLL, C. et al. Quantifying Facial Expression Intensity and Signal Use in Deaf Signers. The Journal of Deaf Studies and Deaf Education, v. 24, n. 4, p. 346–355, 1 Oct. 2019.

TRICCO, A. C. et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Annals of Internal Medicine, v. 169, n. 7, p. 467–473, 2 Oct. 2018.

WEISEL, A. Deafness and perception of nonverbal expression of emotion. Perceptual and Motor Skills, v. 61, n. 2, p. 515–522, 1985.

XAVIER, A. N. Análise preliminar de expressões não-manuais lexicais na libras. Revista Intercâmbio, v. XL, p. 41–66, 2019.

Rafael Silva Guilherme

Holds a doctorate in Psychology from the Universidade Federal do Espírito Santo (UFES) and a master's degree in Psychology from the Pontifícia Universidade Católica de Minas Gerais (PUC Minas). Graduated in Portuguese Language from the Universidade Federal de Lavras (UFLA), in Social Work from PUC Minas, and in Assistive Communication in Brazilian Sign Language (Libras)/Braille from PUC Minas.

Rosana Suemi Tokumaru

Tenured faculty member at the Universidade Federal de Juiz de Fora (UFJF). Professor and Libras–Portuguese translator/interpreter.

The texts of this article were reviewed by third parties and submitted to the author(s) for validation prior to publication.